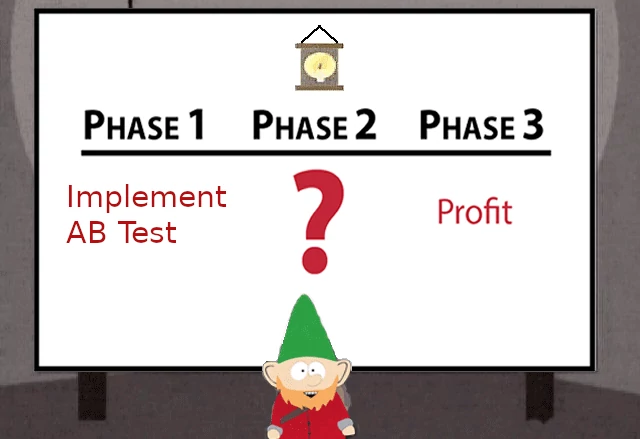

You have a great idea to improve the user experience in your app. Maybe it’s a redesign of the sign-up screen or a new layout for the purchase form. Now, you’re ready to validate that idea with an AB test.

Sounds simple, right? Just split your users into two equal groups (50/50), show one group the current version and the other your new design, and then measure which performs better. Well, there’s a bit more to it than that…

Reality is more complicated. The devil is in the details, and running an AB test well involves many nuances. Let’s go over them.

Measurement

Before you start implementing an AB test, think about how you will measure it. In other words, how can you be sure that the new experience (B) is better than the old one (A)?

You need a metric. It can be the number of signups, purchases, or downloads, depending on your test. This will be your primary metric. If you see a significant increase in that number when comparing B to A, you can trust that the new experience is better.

What is a significant increase? It should be big enough from a statistics and probability theory standpoint (if you want to learn more about this and what a p-value is, check this article). Simply put: given thousands of users in each group (A or B), B must show hundreds or at least dozens more signups or purchases than A before you decide to go with that variation. Consult with your data analyst or drop me a line to discuss the exact formula. If statistics is not your thing, don’t worry. Popular AB test analysis solutions have the required formulas built in.
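To make “significant” a bit more concrete, here is a minimal sketch of one common check, a two-proportion z-test. It is only an illustration, not necessarily the formula your analytics tool uses. The function name and the example counts are made up for this post, and 0.05 is just a conventional threshold.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that A and B convert equally well.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test: no variation in the data")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical numbers: 5,000 users per group, 500 conversions in A.
_, p_small = two_proportion_z_test(500, 5000, 530, 5000)  # 30 more in B
_, p_large = two_proportion_z_test(500, 5000, 580, 5000)  # 80 more in B

print(p_small < 0.05)  # False: 30 extra conversions could easily be noise
print(p_large < 0.05)  # True: 80 extra conversions are unlikely to be noise
```

With these made-up numbers, a few dozen extra conversions in B are not enough, while a somewhat larger gap is. The exact cut-off depends on your traffic and baseline conversion rate.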

Did I just say thousands of users must be in the test? Yes, or at least several hundred. So if the page you want to AB test (the signup or purchase page) gets 50 users a day, then running the test for a month means at most 1,500 users will visit that page. For some tests that is not enough to get significant results. And what if your daily traffic is even lower? Then maybe you shouldn’t run an AB test at all, as you surely don’t want the test to run for half a year. No worries, there are other ways to verify your hypothesis. We’ll talk about them in later posts.
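The traffic arithmetic can be sketched as a tiny helper. It assumes every visitor to the page enters the test and that traffic is split evenly. The required number of users per group is an input you would get from your analyst or your AB testing tool; the 2,000 below is a hypothetical figure, not a recommendation.

```python
import math

def days_to_reach(required_per_group, daily_visitors, groups=2):
    """Days needed until every group has the required number of users."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    total_needed = required_per_group * groups
    return math.ceil(total_needed / daily_visitors)

# 50 visitors a day for 30 days gives at most 1,500 users in the test.
print(50 * 30)  # 1500

# Hypothetical target: 2,000 users per group at 50 visitors a day.
print(days_to_reach(2000, 50))  # 80
```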

Execution metrics help validate that you didn’t introduce any bugs and that the primary metric is trustworthy. The number of app crashes or errors can be such a metric. If it is much higher for one group, there is probably a bug in the implementation.

Implementation

Here’s how AB test logic looks in a nutshell: when a user performs a certain trigger action, she is randomly assigned to one of the variations, A or B, and gets the corresponding experience. That assignment is tracked: a dedicated analytics event is sent. All of the user’s other actions are tracked too. From the moment of assignment to the end of the test, the user should get the same experience.
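The flow above can be sketched in a few lines. This is a simplified illustration under my own assumptions, not how any particular AB testing tool works. Instead of a random number generator it hashes the user ID, which is one common way to get an assignment that looks random across users but stays the same for each user. The names `assign_variation`, `Experiment`, `on_trigger` and the event name `ab_test_assigned` are made up, and the in-memory set stands in for whatever persistent storage a real app would use.

```python
import hashlib

def assign_variation(user_id, test_name):
    """Deterministically map a user to 'A' or 'B' (roughly 50/50 across users)."""
    digest = hashlib.sha256(f"{test_name}:{user_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"

class Experiment:
    def __init__(self, name, track):
        self.name = name
        self.track = track       # hypothetical callable that sends an analytics event
        self._assigned = set()   # stand-in for persistent storage

    def on_trigger(self, user_id):
        """Call this at the trigger (e.g. the Upgrade click), not earlier."""
        variation = assign_variation(user_id, self.name)
        if user_id not in self._assigned:
            # Send the dedicated assignment event once per user.
            self._assigned.add(user_id)
            self.track("ab_test_assigned", {
                "test": self.name,
                "user_id": user_id,
                "variation": variation,
            })
        return variation

# Usage: collect events in a list instead of sending them anywhere.
events = []
experiment = Experiment("purchase_form_redesign",
                        lambda name, props: events.append((name, props)))
first_visit = experiment.on_trigger("user-42")
second_visit = experiment.on_trigger("user-42")
print(first_visit == second_visit)  # True: same user, same experience
print(len(events))                  # 1: assignment event sent once
```

Because the variation is derived from the user ID, the same user lands in the same group on every visit without any lookup.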

Sounds simple, yet it is so easy to implement wrong. Here are some typical implementation issues.

- User is assigned to an AB test before the trigger

Back to our purchase form example: let’s say the form opens when the user clicks an Upgrade button. The right moment to assign a user to the test is exactly when she clicks that Upgrade button. This is when we decide whether she gets the A or the B experience. Assigning a user earlier, for instance when you render the Upgrade button, is wrong. She might never click the button, and she would pollute the test data.

- User’s experience is inconsistent

If someone was assigned to A (the old experience), she should get that same experience every time she revisits the app.

- User assignment event is not sent

A user got the B experience, but we failed to send the assignment analytics event for her. Whoops 🙁 Without that event, her actions can’t be attributed to a variation.

- Assignment imbalance

Let’s assume you wanted to exclude mobile web users from the test, for instance because the mobile screen is too small to display the new experience. So you excluded mobile users from the B group but kept them in the A variation. That is a mistake. As a result, you will not get a 50/50 allocation. It might be 45/55 or some other imbalance, which leads to incorrect test results. Not to mention that mobile web users could be a group with its own intent and its own effects (slow internet, small screen size, etc.). Bottom line: assignment must be balanced.

- Including users in the test who should have been excluded

It’s safer to run an AB test on new users. This ensures users get a consistent experience and won’t be confused. Pay special attention to the assignment configuration, and exclude existing users or any other group if needed.
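One way to catch an assignment imbalance early is to compare the number of assignment events per variation against the intended 50/50 split. This is often called a sample ratio mismatch check. Below is a minimal sketch using a chi-square test with one degree of freedom. The function name is made up, the counts are hypothetical, and the 0.001 alarm threshold is an assumption you may want to tune.

```python
import math

def srm_p_value(count_a, count_b):
    """P-value for the hypothesis that users were split 50/50 between A and B."""
    total = count_a + count_b
    if total == 0:
        raise ValueError("no assignments recorded")
    expected = total / 2
    chi2 = ((count_a - expected) ** 2 + (count_b - expected) ** 2) / expected
    # Upper tail of the chi-square distribution with one degree of freedom.
    return math.erfc(math.sqrt(chi2 / 2))

# Hypothetical assignment counts from the analytics events.
print(srm_p_value(4950, 5050) < 0.001)  # False: normal random fluctuation
print(srm_p_value(4500, 5500) < 0.001)  # True: a 45/55 split is a red flag
```

A very small p-value does not say what went wrong, only that the split is unlikely to be chance. Missing assignment events or exclusions applied to one group only are the first things to check.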

Summary

I hope you are now better prepared for your first AB test. My friendly advice: warm up with a test that has moderate impact but is quick to run. Don’t start with the purchase form (which I used as an example in this post, sorry).

If you need some help, leave a comment or drop me a line. I’m curious to learn about your AB test and will try to help.

Good luck!
